| name | ip | role |
| --- | --- | --- |
| elasticsearch_node1 | 172.18.0.201 | master |
| elasticsearch_node2 | 172.18.0.202 | node |
| elasticsearch_node3 | 172.18.0.203 | node |

Set the kernel parameter

# Apply immediately
sysctl -w vm.max_map_count=262144

# To make it permanent, append this line to the end of /etc/sysctl.conf
vm.max_map_count=262144
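
A minimal sketch of making the setting persistent without appending a duplicate line each time the step is repeated. The helper name `ensure_sysctl_line` is hypothetical; it works on whatever file path it is given, so on a real host it would be pointed at /etc/sysctl.conf and run as root.

```shell
# Hypothetical helper: make sure "key=value" appears exactly once in a
# sysctl-style file. The body runs in a subshell so its variables do not leak.
ensure_sysctl_line() (
    file="$1"; key="$2"; value="$3"
    tmp="$(mktemp)" || exit 1
    touch "$file"
    # Keep every line except an existing assignment of the same key.
    awk -v k="$key" '{
        name = $0
        sub(/=.*/, "", name)
        gsub(/^[ \t]+|[ \t]+$/, "", name)
        if (name != k) print
    }' "$file" > "$tmp"
    # Append the desired assignment and write the result back in place.
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
)

# Usage on a real host (as root):
#   ensure_sysctl_line /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p
```

Rewriting the file through a temporary copy keeps unrelated settings untouched while replacing any older value of the same key.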

Create a new network (elasticsearch_net) for the Elasticsearch cluster

docker network create --subnet=172.18.0.0/16 elasticsearch_net
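
Running the create command a second time fails because the network already exists. A small sketch that only creates the network when it is missing; the function name `ensure_network` is an assumption made for illustration, while `docker network inspect` and `docker network create --subnet` are the standard Docker CLI subcommands.

```shell
# Hypothetical helper: create a Docker network only if it does not exist yet.
# $1 = network name, $2 = subnet in CIDR notation
ensure_network() {
    if docker network inspect "$1" >/dev/null 2>&1; then
        echo "network $1 already exists"
    else
        docker network create --subnet="$2" "$1"
    fi
}

# Usage:
#   ensure_network elasticsearch_net 172.18.0.0/16
```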

Run a temporary Elasticsearch container to copy the required files from

docker run -d --name elasticsearch -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" elasticsearch:7.9.3

# Create the target directories first, so that each copy ends up in nodeN/config
mkdir -p /elasticsearch/node1 /elasticsearch/node2 /elasticsearch/node3
docker cp elasticsearch:/usr/share/elasticsearch/config /elasticsearch/node1/
docker cp elasticsearch:/usr/share/elasticsearch/config /elasticsearch/node2/
docker cp elasticsearch:/usr/share/elasticsearch/config /elasticsearch/node3/

Create a shared volume that all containers can access

docker volume create --name BackupVolume

Deploy the Elasticsearch cluster

docker run -d \
  --name=elasticsearch_node1 \
  --restart=always \
  --net elasticsearch_net \
  --ip 172.18.0.201 \
  --ulimit memlock=-1:-1 \
  -p 127.0.0.1:9201:9200 \
  -p 127.0.0.1:9301:9300 \
  -v BackupVolume:/elasticsearch/backup \
  -v /elasticsearch/node1/plugins:/usr/share/elasticsearch/plugins \
  -v /elasticsearch/node1/data:/usr/share/elasticsearch/data \
  -v /elasticsearch/node1/logs:/usr/share/elasticsearch/logs \
  -v /elasticsearch/node1/config:/usr/share/elasticsearch/config \
  -e bootstrap.memory_lock=true \
  -e node.data=true \
  -e node.name=elasticsearch_node1 \
  -e node.master=true \
  -e network.host=elasticsearch_node1 \
  -e discovery.seed_hosts=elasticsearch_node1 \
  -e cluster.initial_master_nodes=elasticsearch_node1 \
  -e cluster.name=es-cluster \
  -e "ES_JAVA_OPTS=-Xms256m -Xmx256m" \
  -e "http.cors.allow-origin=*" \
  -e http.cors.enabled=true \
  elasticsearch:7.9.3

docker run -d \
  --name=elasticsearch_node2 \
  --restart=always \
  --net elasticsearch_net \
  --ip 172.18.0.202 \
  --ulimit memlock=-1:-1 \
  --volumes-from=elasticsearch_node1 \
  -p 127.0.0.1:9202:9200 \
  -p 127.0.0.1:9302:9300 \
  -v /elasticsearch/node2/plugins:/usr/share/elasticsearch/plugins \
  -v /elasticsearch/node2/data:/usr/share/elasticsearch/data \
  -v /elasticsearch/node2/logs:/usr/share/elasticsearch/logs \
  -v /elasticsearch/node2/config:/usr/share/elasticsearch/config \
  -e bootstrap.memory_lock=true \
  -e node.data=true \
  -e node.name=elasticsearch_node2 \
  -e node.master=true \
  -e network.host=elasticsearch_node2 \
  -e discovery.seed_hosts=elasticsearch_node1 \
  -e cluster.initial_master_nodes=elasticsearch_node1 \
  -e cluster.name=es-cluster \
  -e "ES_JAVA_OPTS=-Xms256m -Xmx256m" \
  -e "http.cors.allow-origin=*" \
  -e http.cors.enabled=true \
  elasticsearch:7.9.3

docker run -d \
  --name=elasticsearch_node3 \
  --restart=always \
  --net elasticsearch_net \
  --ip 172.18.0.203 \
  --ulimit memlock=-1:-1 \
  --volumes-from=elasticsearch_node1 \
  -p 127.0.0.1:9203:9200 \
  -p 127.0.0.1:9303:9300 \
  -v /elasticsearch/node3/plugins:/usr/share/elasticsearch/plugins \
  -v /elasticsearch/node3/data:/usr/share/elasticsearch/data \
  -v /elasticsearch/node3/logs:/usr/share/elasticsearch/logs \
  -v /elasticsearch/node3/config:/usr/share/elasticsearch/config \
  -e bootstrap.memory_lock=true \
  -e node.data=true \
  -e node.name=elasticsearch_node3 \
  -e node.master=true \
  -e network.host=elasticsearch_node3 \
  -e discovery.seed_hosts=elasticsearch_node1 \
  -e cluster.initial_master_nodes=elasticsearch_node1 \
  -e cluster.name=es-cluster \
  -e "ES_JAVA_OPTS=-Xms256m -Xmx256m" \
  -e "http.cors.allow-origin=*" \
  -e http.cors.enabled=true \
  elasticsearch:7.9.3

# The plugins, data and logs directories are created by Docker as root; open them up so Elasticsearch can write to them
chmod -R 777 /elasticsearch/node1/*
chmod -R 777 /elasticsearch/node2/*
chmod -R 777 /elasticsearch/node3/*
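
The three `docker run` commands differ only in the node number, so they can be generated from one template. The sketch below is a dry run that only prints the commands; the function name `node_run_cmd` is made up for this example. One deliberate difference from the commands above: it mounts BackupVolume directly on every node instead of using `--volumes-from`, which is an assumption about what is wanted, not the original setup.

```shell
# Hypothetical helper: print the docker run command for node N (1, 2 or 3).
# The body runs in a subshell so the variable n does not leak.
node_run_cmd() (
    n="$1"
    cat <<EOF
docker run -d \\
  --name=elasticsearch_node${n} \\
  --restart=always \\
  --net elasticsearch_net \\
  --ip 172.18.0.20${n} \\
  --ulimit memlock=-1:-1 \\
  -p 127.0.0.1:920${n}:9200 \\
  -p 127.0.0.1:930${n}:9300 \\
  -v BackupVolume:/elasticsearch/backup \\
  -v /elasticsearch/node${n}/plugins:/usr/share/elasticsearch/plugins \\
  -v /elasticsearch/node${n}/data:/usr/share/elasticsearch/data \\
  -v /elasticsearch/node${n}/logs:/usr/share/elasticsearch/logs \\
  -v /elasticsearch/node${n}/config:/usr/share/elasticsearch/config \\
  -e bootstrap.memory_lock=true \\
  -e node.data=true \\
  -e node.name=elasticsearch_node${n} \\
  -e node.master=true \\
  -e network.host=elasticsearch_node${n} \\
  -e discovery.seed_hosts=elasticsearch_node1 \\
  -e cluster.initial_master_nodes=elasticsearch_node1 \\
  -e cluster.name=es-cluster \\
  -e "ES_JAVA_OPTS=-Xms256m -Xmx256m" \\
  -e "http.cors.allow-origin=*" \\
  -e http.cors.enabled=true \\
  elasticsearch:7.9.3
EOF
)

# Dry run: print the command for each node.
for n in 1 2 3; do
    node_run_cmd "$n"
done
```

To actually start a node, the printed command can be piped to a shell, for example `node_run_cmd 2 | sh`.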

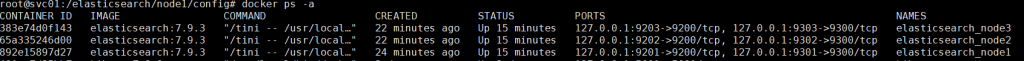

Check that the containers are running

docker ps -a

Edit the Elasticsearch configuration files

vim /elasticsearch/node1/config/elasticsearch.yml
vim /elasticsearch/node2/config/elasticsearch.yml
vim /elasticsearch/node3/config/elasticsearch.yml

# Add this line to each file
path.repo: /elasticsearch/backup

Restart Elasticsearch

docker restart elasticsearch_node1 && docker restart elasticsearch_node2 && docker restart elasticsearch_node3
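
After the restart it can help to confirm that all three nodes have rejoined before moving on. The sketch below reads a `_cluster/health` response on standard input and checks its `status` and `number_of_nodes` fields; the function name `cluster_ok` and the "three nodes, not red" rule are assumptions chosen for this setup.

```shell
# Hypothetical helper: succeed only if the health JSON on stdin reports
# three nodes and a status of green or yellow.
cluster_ok() {
    python3 -c '
import json, sys
h = json.load(sys.stdin)
ok = h.get("number_of_nodes") == 3 and h.get("status") in ("green", "yellow")
print(h.get("status"), h.get("number_of_nodes"))
sys.exit(0 if ok else 1)
'
}

# Usage:
#   curl -s "http://127.0.0.1:9201/_cluster/health" | cluster_ok
```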

Enter the master node and change the ownership of the snapshot directory

docker exec -it elasticsearch_node1 /bin/bash
chown -R elasticsearch /elasticsearch/
exit

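Create the snapshot repository:

curl -H "Content-Type: application/json" -XPUT http://127.0.0.1:9201/_snapshot/backup -d '
{
  "type": "fs",
  "settings": {
    "location": "/elasticsearch/backup",
    "max_snapshot_bytes_per_sec": "50mb",
    "max_restore_bytes_per_sec": "50mb"
  }
}'

The repository is given a name; in this example it is called backup.

Its type is fs, a shared file system.

The location is the mounted path used as the destination. It has to match the path.repo value configured earlier. The two throttle options are repository settings, so they go inside settings alongside the location.

A response of {"acknowledged":true} means the repository was created.

Query the snapshot repository

curl -XGET 'http://127.0.0.1:9201/_snapshot?pretty'

{
  "backup" : {
    "type" : "fs",
    "settings" : {
      "location" : "/elasticsearch/backup",
      "max_restore_bytes_per_sec" : "50mb",
      "max_snapshot_bytes_per_sec" : "50mb"
    }
  }
}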
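
To check the repository from a script rather than by eye, the location can be pulled out of the `GET _snapshot` response. A sketch, assuming python3 is available on the host; `repo_location` is a hypothetical name. If the repository is missing, python3 exits with an error and the function returns non-zero.

```shell
# Hypothetical helper: read a GET _snapshot response on stdin and print the
# location of the repository named in $1.
repo_location() {
    python3 -c '
import json, sys
repos = json.load(sys.stdin)
print(repos[sys.argv[1]]["settings"]["location"])
' "$1"
}

# Usage:
#   curl -s "http://127.0.0.1:9201/_snapshot" | repo_location backup
```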

Write the backup script

vim /tmp/es_backup.sh

#!/bin/bash
# Purpose: take a snapshot of all Elasticsearch indices and keep 2 days of snapshots.

# Date two days ago
B_DATE=$(date -d "2 day ago" +%F)
# Log file for this script
LOG_FILE="/tmp/es_backup.log"
# Time at which the script was started
CUR_TIME=$(date +%F_%H-%M-%S)
# Repository name
STORE_NAME="backup"
# Snapshot name
SNAPSHOT_PRE="snapshot_all"
SNAPSHOT_NAME="${SNAPSHOT_PRE}_${CUR_TIME}"
# Elasticsearch API endpoint
Snap_API="http://127.0.0.1:9201"
# Absolute path to curl
CURL_CMD="/usr/bin/curl"

# Create the snapshot
echo "=====${SNAPSHOT_NAME}===== snapshot started" >> "${LOG_FILE}"
# Blocking variant: wait until the snapshot has completed
#${CURL_CMD} -s -XPUT "${Snap_API}/_snapshot/${STORE_NAME}/${SNAPSHOT_NAME}?wait_for_completion=true" >> "${LOG_FILE}"
# Non-blocking variant: return immediately, the snapshot continues in the background
${CURL_CMD} -s -XPUT "${Snap_API}/_snapshot/${STORE_NAME}/${SNAPSHOT_NAME}" >> "${LOG_FILE}"
echo "=====${SNAPSHOT_NAME}===== snapshot request finished" >> "${LOG_FILE}"

# Delete the snapshots taken two days ago
for snap_name in $(${CURL_CMD} -sXGET "${Snap_API}/_snapshot/${STORE_NAME}/_all" | python3 -m json.tool | grep '"snapshot":' | awk -F'[:",]' '{print $5}' | grep "${SNAPSHOT_PRE}" | grep "${B_DATE}")
do
    # -f makes curl exit non-zero on an HTTP error, so $? reflects the real result
    ${CURL_CMD} -sf -XDELETE "${Snap_API}/_snapshot/${STORE_NAME}/${snap_name}" >> "${LOG_FILE}"
    if [ $? -eq 0 ];then
        echo "delete snapshot: ${snap_name} success" >> "${LOG_FILE}"
    else
        echo "delete snapshot: ${snap_name} fail" >> "${LOG_FILE}"
    fi
done

chmod +x /tmp/es_backup.sh
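
The cleanup loop in the script only matches snapshots dated exactly two days ago, so a day on which the script did not run leaves older snapshots behind. A sketch of an alternative selection step that lists every snapshot at or before a cutoff date; the function name `expired_snapshots` is hypothetical, and it assumes snapshot names follow the `snapshot_all_YYYY-MM-DD_HH-MM-SS` pattern the script produces.

```shell
# Hypothetical helper: read a GET _snapshot/<repo>/_all response on stdin and
# print the names of snapshots with prefix $1 dated on or before $2 (YYYY-MM-DD).
expired_snapshots() {
    python3 -c '
import json, sys
prefix, cutoff = sys.argv[1], sys.argv[2]
for snap in json.load(sys.stdin).get("snapshots", []):
    name = snap["snapshot"]
    if not name.startswith(prefix + "_"):
        continue
    # The date is the first 10 characters after the prefix and underscore.
    day = name[len(prefix) + 1:][:10]
    # ISO dates sort correctly as plain strings.
    if day <= cutoff:
        print(name)
' "$1" "$2"
}

# Usage:
#   cutoff=$(date -d "2 day ago" +%F)
#   curl -s "http://127.0.0.1:9201/_snapshot/backup/_all" | expired_snapshots snapshot_all "$cutoff"
```

Parsing the JSON directly also avoids depending on how `json.tool` lays out its output.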

Create a cron job that runs the backup every day (the snapshot data is stored in /var/lib/docker/volumes/BackupVolume/_data/)

crontab -e

0 0 * * * /tmp/es_backup.sh

View information about all snapshots

curl -XGET "http://127.0.0.1:9201/_snapshot/backup/_all" | python3 -m json.tool
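
Because the backup script does not wait for completion, the `state` field of each snapshot is the place to see whether it actually succeeded. A short sketch that reduces the response to one line per snapshot; `snapshot_states` is a name invented for this example.

```shell
# Hypothetical helper: read a GET _snapshot/<repo>/_all response on stdin and
# print "<name> <state>" for every snapshot.
snapshot_states() {
    python3 -c '
import json, sys
for snap in json.load(sys.stdin).get("snapshots", []):
    print(snap["snapshot"], snap.get("state", "UNKNOWN"))
'
}

# Usage:
#   curl -s "http://127.0.0.1:9201/_snapshot/backup/_all" | snapshot_states
```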
