Environment:

- filebeat: 7.9.1
- redis: any version
- logstash: 7.17.11
- elasticsearch: 7.9.1
- kibana: 7.9.1
- grafana: 6.6.2

nginx log format

- Make sure nginx logs exactly these fields. If you rename any of them, the Grafana dashboard has to be adjusted to match.

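log_format belongs in the http {} block. escape=json (nginx 1.11.8 or later) makes nginx escape quotes and control characters in values such as $request_body the JSON way, so every line stays valid JSON. The access_log must write to a path matched by the Filebeat input below, for example (the file name is illustrative): access_log /data/wwwlogs/example_nginx.log aka_logs;

log_format aka_logs escape=json
    '{"@timestamp":"$time_iso8601",'
    '"host":"$hostname",'
    '"server_ip":"$server_addr",'
    '"client_ip":"$remote_addr",'
    '"xff":"$http_x_forwarded_for",'
    '"domain":"$host",'
    '"url":"$uri",'
    '"referer":"$http_referer",'
    '"args":"$args",'
    '"upstreamtime":"$upstream_response_time",'
    '"responsetime":"$request_time",'
    '"request_method":"$request_method",'
    '"status":"$status",'
    '"size":"$body_bytes_sent",'
    '"request_body":"$request_body",'
    '"request_length":"$request_length",'
    '"protocol":"$server_protocol",'
    '"upstreamhost":"$upstream_addr",'
    '"file_dir":"$request_filename",'
    '"http_user_agent":"$http_user_agent"'
    '}';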
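Why escaping matters: with nginx's default `log_format` escaping, a double quote inside a variable (common in JSON `$request_body` payloads or in `$http_user_agent`) is written as `\x22`, which JSON does not allow, so Filebeat's JSON decoding rejects the whole line. The `escape=json` parameter writes `\"` instead. The Python sketch below reproduces the difference; `parses_as_json` is a hypothetical helper and the two sample lines are made up for illustration.

```python
import json


def parses_as_json(line: str) -> bool:
    """Return True if a log line is valid JSON (what Filebeat's json.* decoding needs)."""
    try:
        json.loads(line)
        return True
    except json.JSONDecodeError:
        return False


# Made-up lines: a user agent containing double quotes, written with
# nginx's default escaping (\x22) versus escape=json (\").
default_escaped = r'{"status":"200","http_user_agent":"curl \x22test\x22"}'
json_escaped = r'{"status":"200","http_user_agent":"curl \"test\""}'

print(parses_as_json(default_escaped))  # False: \x is not a valid JSON escape
print(parses_as_json(json_escaped))     # True
```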

Install Redis

See: https://tech.sharespace.top/redis/

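Filebeat configuration

Install Filebeat

Ubuntu

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -
sudo add-apt-repository "deb https://artifacts.elastic.co/packages/7.x/apt stable main"
sudo apt update
sudo apt install filebeat=7.9.1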

CentOS

sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

sudo tee /etc/yum.repos.d/elastic-7.x.repo <<EOF
[elastic-7.x]
name=Elastic repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF

sudo yum install -y filebeat-7.9.1

Configure Filebeat

vim /etc/filebeat/filebeat.yml

#=========================== Filebeat inputs =============================
filebeat.inputs:
# Collect nginx logs
- type: log
  enabled: true
  paths:
    - /data/wwwlogs/*_nginx.log
  # The log lines are JSON, so enable JSON decoding
  json.keys_under_root: true
  json.overwrite_keys: true
  json.add_error_key: true

#-------------------------- Redis output ------------------------------
# Filebeat allows only one output: comment out the default output.elasticsearch section
output.redis:
  hosts: ["host"]        # Redis server that receives the logs
  password: "password"
  key: "nginx_logs"      # key of the Redis list that holds the log data
  db: 0
  timeout: 5
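To make the three `json.*` options concrete, here is a simplified Python model of what they do to an event. It is not Filebeat's code: `decode_into_event` and the sample fields are hypothetical, and real Filebeat events carry many more fields.

```python
import json
from typing import Any, Dict


def decode_into_event(event: Dict[str, Any], line: str,
                      keys_under_root: bool = True,
                      overwrite_keys: bool = True,
                      add_error_key: bool = True) -> Dict[str, Any]:
    """Simplified, hypothetical model of Filebeat's json.* input options."""
    result = dict(event)
    try:
        decoded = json.loads(line)
    except json.JSONDecodeError as exc:
        if add_error_key:
            # json.add_error_key: record the failure as error.message / error.type
            result["error"] = {"message": str(exc), "type": "json"}
        return result
    if not keys_under_root:
        # Without keys_under_root the decoded object is nested under "json"
        result["json"] = decoded
        return result
    for key, value in decoded.items():
        # json.overwrite_keys decides who wins when a key already exists
        if overwrite_keys or key not in result:
            result[key] = value
    return result


# Fields Filebeat itself adds (heavily trimmed) and one nginx JSON line
filebeat_fields = {"host": {"name": "filebeat-node"}, "agent": {"type": "filebeat"}}
nginx_line = '{"host":"web01","status":"200"}'

print(decode_into_event(filebeat_fields, nginx_line)["host"])                        # web01
print(decode_into_event(filebeat_fields, nginx_line, overwrite_keys=False)["host"])  # {'name': 'filebeat-node'}
```

With `json.overwrite_keys: true`, the nginx `host` value replaces the `host` field Filebeat adds on its own.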

sudo systemctl start filebeat
sudo systemctl enable filebeat
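Filebeat and Logstash never talk to each other directly. With the default `list` data type, Filebeat appends each event to the Redis list named by `key` (RPUSH), and the Logstash `redis` input configured below with `data_type => "list"` takes entries from the head of that list, so both `key` values must match exactly. The in-memory Python stand-in below (`FakeRedisLists` is hypothetical, not a Redis client) illustrates that FIFO handoff; on a real server, `redis-cli` with `LLEN nginx_logs` shows how many events are still waiting.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class FakeRedisLists:
    """Hypothetical in-memory stand-in for the Redis list commands involved."""

    def __init__(self) -> None:
        self._lists: Dict[str, Deque[str]] = defaultdict(deque)

    def rpush(self, key: str, value: str) -> int:
        # Shipper side: append to the tail, return the new length
        self._lists[key].append(value)
        return len(self._lists[key])

    def lpop(self, key: str) -> Optional[str]:
        # Consumer side: take the oldest entry from the head, None if empty
        queue = self._lists.get(key)
        return queue.popleft() if queue else None

    def llen(self, key: str) -> int:
        return len(self._lists.get(key, ()))


r = FakeRedisLists()
r.rpush("nginx_logs", '{"status":"200"}')
r.rpush("nginx_logs", '{"status":"404"}')
print(r.lpop("nginx_log"))   # None: a mistyped key on the consumer side sees nothing
print(r.lpop("nginx_logs"))  # {"status":"200"} (oldest first)
print(r.llen("nginx_logs"))  # 1
```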

Logstash configuration

Install Logstash

Ubuntu

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -
echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-7.x.list
sudo apt-get update
sudo apt-get install -y logstash=1:7.17.11-1

CentOS

This is the same repository added for Filebeat above; skip the two repository steps if it is already configured.

sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

sudo tee /etc/yum.repos.d/elastic-7.x.repo <<EOF
[elastic-7.x]
name=Elastic repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF

sudo yum install -y logstash-7.17.11

Configure Logstash

vim /etc/logstash/conf.d/filebeat-nginx.conf

input {
  # Read the nginx log list that Filebeat pushes to Redis
  redis {
    data_type => "list"
    key => "nginx_logs"      # must match key in filebeat.yml
    host => "redis"          # Redis address
    port => 6379
    password => "password"
    db => 0
  }
}

filter {
  geoip {
    #multiLang => "zh-CN"
    target => "geoip"
    source => "client_ip"
    database => "/usr/share/logstash/GeoLite2-City.mmdb"
    add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
    add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
    # Drop geoip fields we don't need
    remove_field => ["[geoip][latitude]", "[geoip][longitude]", "[geoip][country_code]", "[geoip][country_code2]", "[geoip][country_code3]", "[geoip][timezone]", "[geoip][continent_code]", "[geoip][region_code]"]
  }

  mutate {
    convert => {
      "size" => "integer"
      "status" => "integer"
      "responsetime" => "float"
      "upstreamtime" => "float"
      "[geoip][coordinates]" => "float"
    }
    # Drop unneeded Filebeat fields. Choose carefully: a field removed here
    # never reaches Elasticsearch, so it cannot be filtered on later
    remove_field => [ "ecs","agent","host","cloud","@version","input","logs_type" ]
  }

  # Parse http_user_agent into the client's OS, browser and version
  useragent {
    source => "http_user_agent"
    target => "ua"
    # Drop unneeded useragent fields
    remove_field => [ "[ua][minor]","[ua][major]","[ua][build]","[ua][patch]","[ua][os_minor]","[ua][os_major]" ]
  }
}

output {
  elasticsearch {
    hosts => "es-master"
    user => "elastic"
    password => "password"
    index => "logstash-nginx-%{+YYYY.MM.dd}"
  }
}
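The `geoip` `add_field` lines and the `mutate` `convert` block above can be read as the Python transformation below. It is a rough, hypothetical model (`to_es_fields` is not a Logstash API) showing two things: the coordinates array is built longitude first, which is the `[lon, lat]` order Elasticsearch uses for a `geo_point` given as an array, and the string fields from the nginx JSON become numbers. How Logstash itself treats a non-numeric value such as nginx's `-` for `upstreamtime` is not modelled; the sketch simply leaves such values alone (an assumption).

```python
from typing import Any, Callable, Dict

# Numeric conversions from the mutate block above
CONVERSIONS: Dict[str, Callable[[str], Any]] = {
    "size": int, "status": int, "responsetime": float, "upstreamtime": float,
}


def to_es_fields(event: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical model of the geoip add_field/remove_field and mutate convert steps."""
    out = dict(event)
    geo = dict(out.get("geoip", {}))
    if "longitude" in geo and "latitude" in geo:
        # Longitude first, then latitude, both converted to float;
        # the separate longitude/latitude keys are removed
        geo["coordinates"] = [float(geo.pop("longitude")), float(geo.pop("latitude"))]
        out["geoip"] = geo
    for field, cast in CONVERSIONS.items():
        if field in out:
            try:
                out[field] = cast(out[field])
            except ValueError:
                pass  # assumption: leave values such as "-" untouched
    return out


# Made-up event as it might arrive from the nginx JSON log
sample = {
    "status": "200", "size": "612", "responsetime": "0.005", "upstreamtime": "-",
    "geoip": {"longitude": "116.3889", "latitude": "39.9288", "city_name": "Beijing"},
}
print(to_es_fields(sample)["geoip"]["coordinates"])  # [116.3889, 39.9288]
```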

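Notes:

- hosts: Elasticsearch address (e.g. http://ip:9200), deployed in the next step
- user: Elasticsearch user (comment out with # if Elasticsearch has no authentication; the Docker setup below sets xpack.security.enabled=false)
- password: Elasticsearch password (comment out with # in the same case)
- index: Elasticsearch index name (customizable)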
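Logstash fills `%{+YYYY.MM.dd}` from the event's `@timestamp` in UTC, so on a server running at UTC+8, requests logged shortly after local midnight land in the previous day's index. A small Python sketch of that naming rule, assuming `@timestamp` carries nginx's `$time_iso8601` value (with `json.overwrite_keys` the decoded `@timestamp` replaces Filebeat's own); `daily_index` is a hypothetical helper.

```python
from datetime import datetime, timezone


def daily_index(timestamp: datetime, prefix: str = "logstash-nginx-") -> str:
    """Mimic index => "logstash-nginx-%{+YYYY.MM.dd}" (date taken from @timestamp in UTC)."""
    if timestamp.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return prefix + timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")


# Made-up $time_iso8601 value from a server at UTC+8
print(daily_index(datetime.fromisoformat("2023-07-01T07:30:00+08:00")))  # logstash-nginx-2023.06.30
```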

sudo systemctl start logstash
sudo systemctl enable logstash

Deploy Elasticsearch and Kibana with Docker

To install Docker and docker-compose, see: https://tech.sharespace.top/docker/

vim docker-compose.yml

version: "3.0"
services:
  elasticsearch:
    container_name: es-container
    image: docker.elastic.co/elasticsearch/elasticsearch:7.9.1
    environment:
      - xpack.security.enabled=false
      - "discovery.type=single-node"
    networks:
      - es-net
    ports:
      - 9200:9200
  kibana:
    container_name: kb-container
    image: docker.elastic.co/kibana/kibana:7.9.1
    environment:
      - ELASTICSEARCH_HOSTS=http://es-container:9200
      - I18N_LOCALE=zh-CN   # Chinese UI; drop this line for English
    networks:
      - es-net
    depends_on:
      - elasticsearch
    ports:
      - 5601:5601
networks:
  es-net:
    driver: bridge

docker-compose up -d

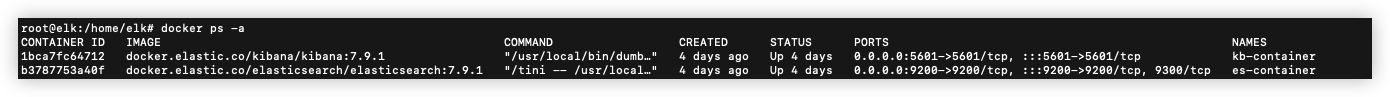

Check that the containers are running

docker ps -a

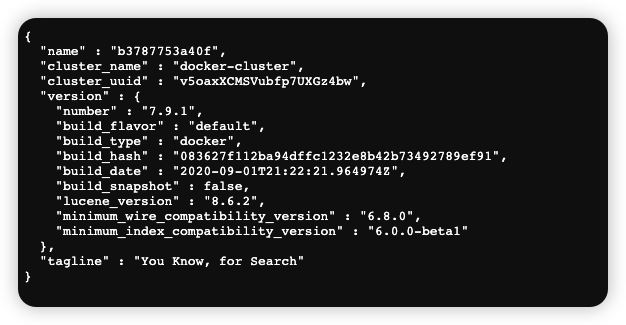

Elasticsearch address: http://ip:9200

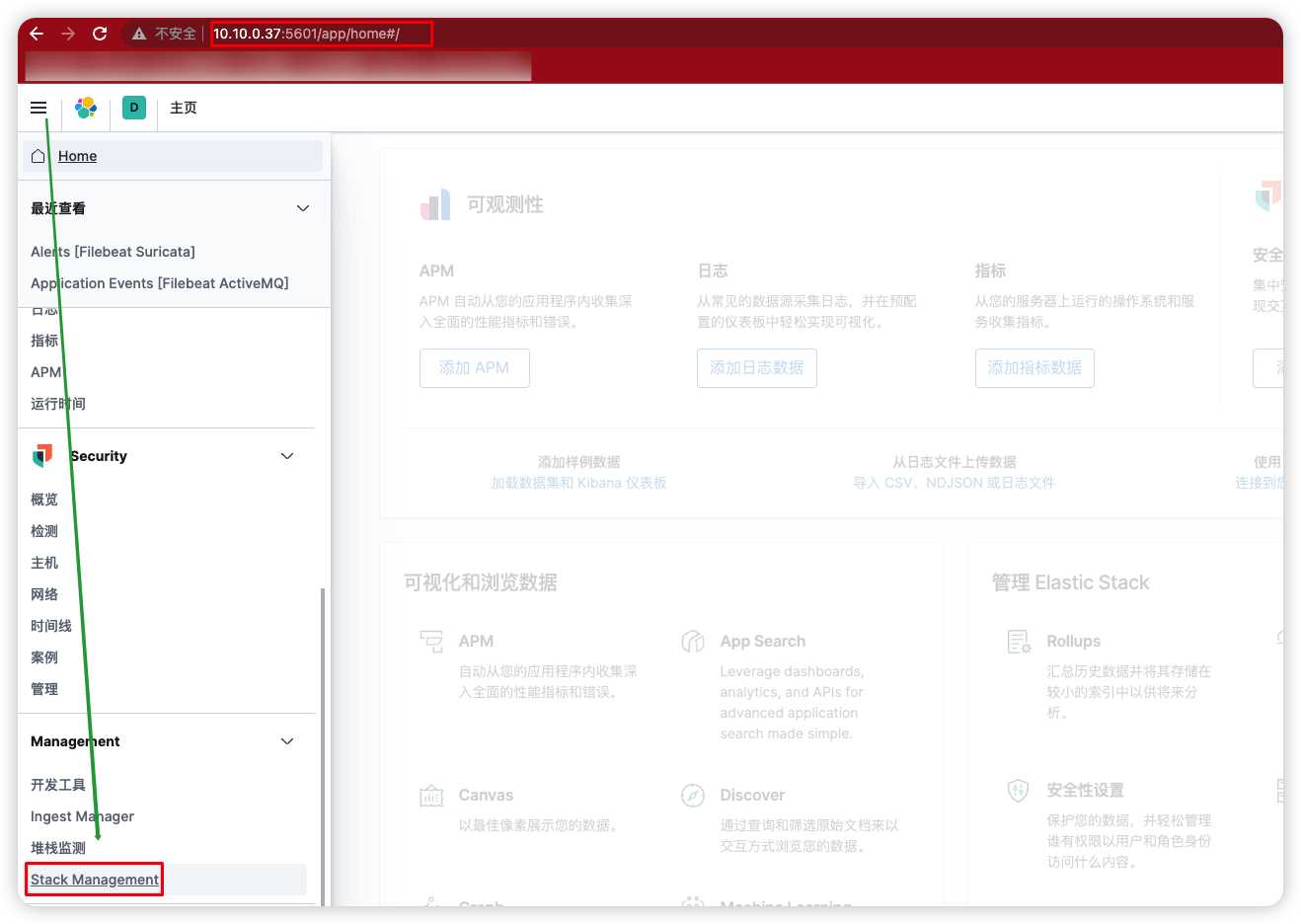
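Besides the browser check, the cluster state can be read from `GET /_cluster/health`. A small Python sketch using only the standard library; `cluster_status` and `http_get` are hypothetical helpers, and the `fetch` parameter exists so the function can be tried without a running cluster. `cluster_status("http://ip:9200")` queries the real instance. On this single-node setup the status is typically `yellow` once indices with replicas exist, because replica shards have no second node to live on.

```python
import json
import urllib.request
from typing import Callable


def http_get(url: str) -> bytes:
    """Plain GET with a short timeout."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read()


def cluster_status(base_url: str, fetch: Callable[[str], bytes] = http_get) -> str:
    """Return "green", "yellow" or "red" from Elasticsearch's /_cluster/health."""
    body = json.loads(fetch(base_url.rstrip("/") + "/_cluster/health"))
    return body["status"]
```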

Kibana address: http://ip:5601 (Kibana plays a minor role in this stack; I mainly use it to inspect Elasticsearch indices)

Deploy Grafana with Docker

docker run -d -p 3000:3000 --name=grafana grafana/grafana:6.6.2

Open http://ip:3000 to configure Grafana; the default username and password are both admin.

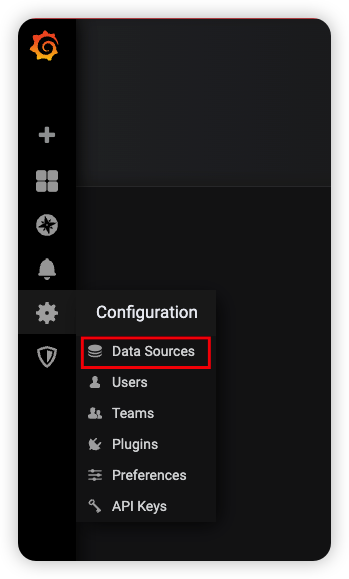

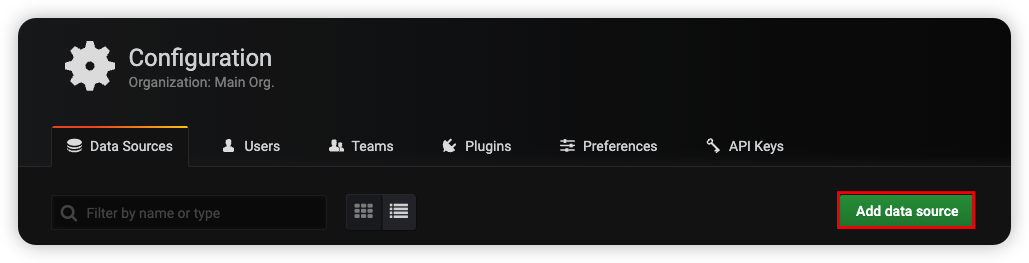

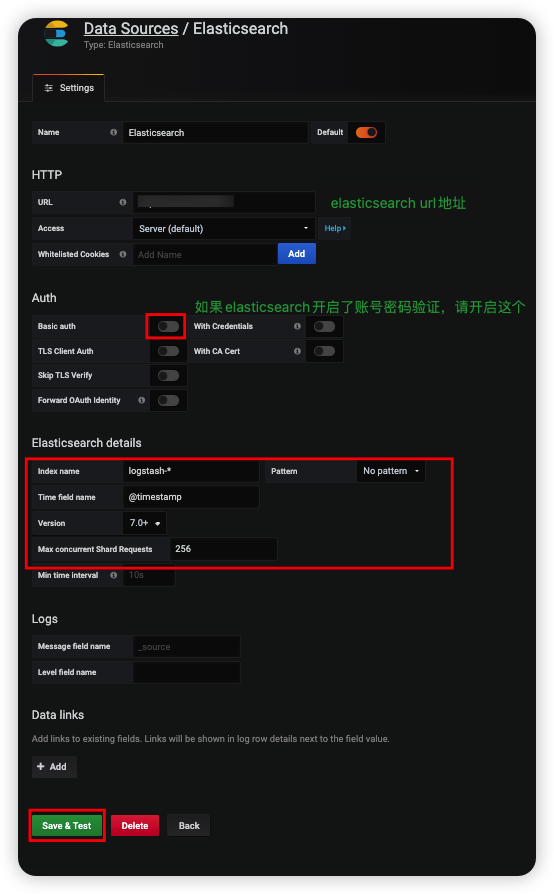

Connect Grafana to Elasticsearch

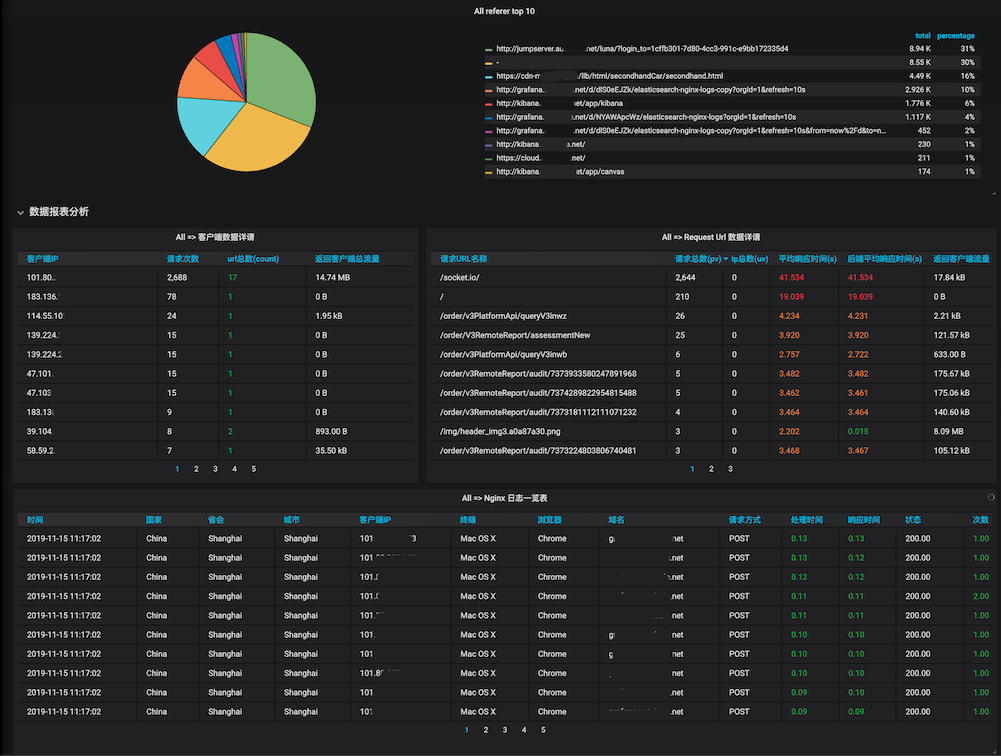

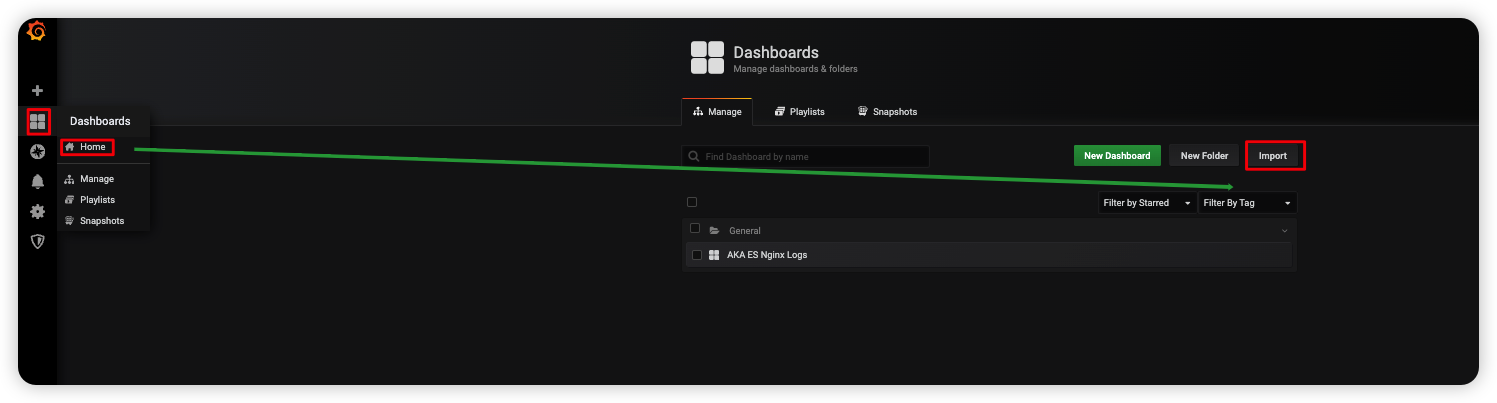

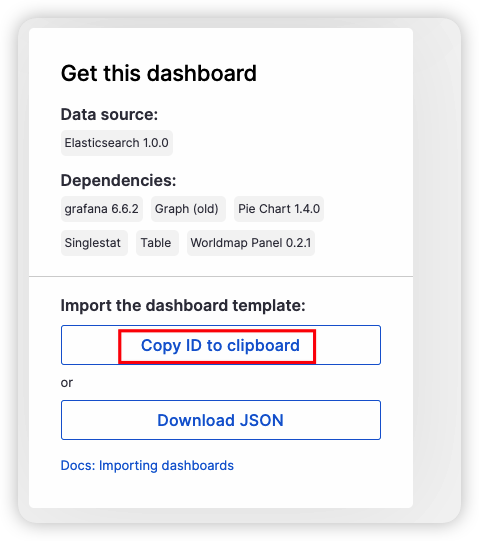

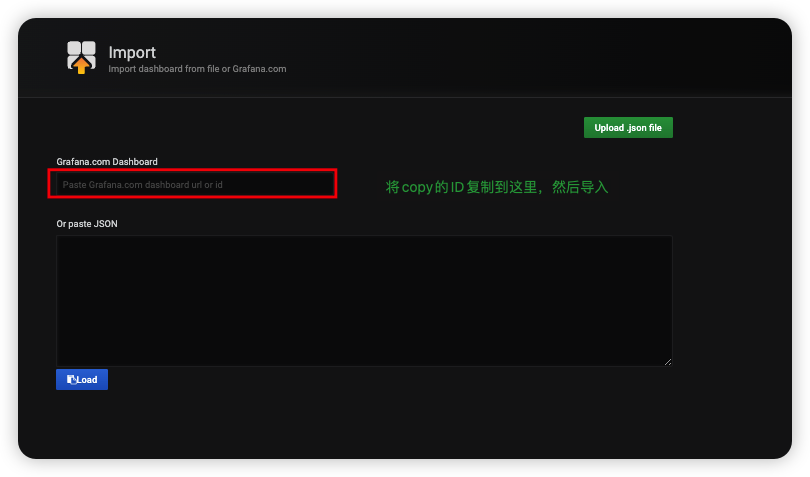
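The data source set up in the screenshots can also be created through Grafana's HTTP API (`POST /api/datasources`). A Python sketch that only builds the request; the data source name, the daily index pattern matching `index => "logstash-nginx-%{+YYYY.MM.dd}"`, and `esVersion: 70` (Grafana 6.x's value for Elasticsearch 7.x, worth checking against your version) are assumptions, and `es_datasource` / `datasource_request` are hypothetical helpers. Sending it would be `urllib.request.urlopen(datasource_request("http://ip:3000", es_datasource("http://ip:9200")))`.

```python
import base64
import json
import urllib.request
from typing import Any, Dict


def es_datasource(es_url: str, index_pattern: str = "[logstash-nginx-]YYYY.MM.DD") -> Dict[str, Any]:
    """Data source body for Grafana's POST /api/datasources (values are assumptions)."""
    return {
        "name": "nginx-es",         # hypothetical data source name
        "type": "elasticsearch",
        "access": "proxy",          # Grafana's server queries Elasticsearch
        "url": es_url,
        "database": index_pattern,  # one index per day, as Logstash writes them
        "jsonData": {"interval": "Daily", "timeField": "@timestamp", "esVersion": 70},
    }


def datasource_request(grafana_url: str, body: Dict[str, Any],
                       user: str = "admin", password: str = "admin") -> urllib.request.Request:
    """Build (but do not send) the POST request with basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": "Basic " + token},
        method="POST",
    )
```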

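Import the Grafana nginx monitoring dashboard

Open https://grafana.com/grafana/dashboards/11190-es-nginx-logs/ and click Copy ID, then paste the ID on Grafana's dashboard import page.

Just in case, a copy of the dashboard JSON file is saved here as well; importing it works the same way.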

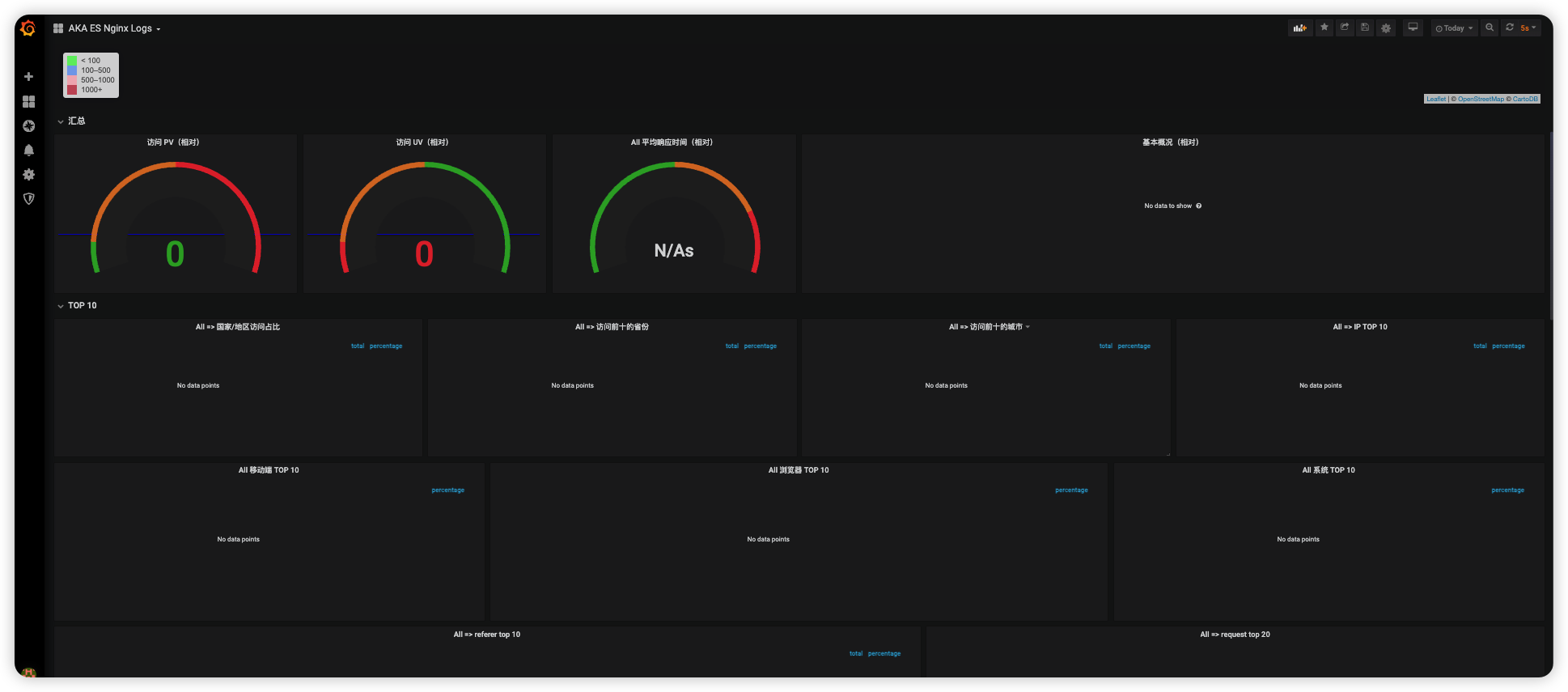

Open the dashboard to view the charts.
